British-Canadian Nobel laureate calls for “urgent and forceful attention” to combat AI safety risks at Stockholm ceremony.

Preceded by orchestral Wagner and reflections on the atomic bomb, University of Toronto professor Geoffrey Hinton crossed the stage of the Stockholm Concert Hall and, with a firm handshake and a bow, officially accepted a Nobel Prize in Physics from the King of Sweden for his contributions to the field of artificial intelligence (AI).

“All of [AI’s] short-term risks require urgent and forceful attention from governments and international organizations.” - Geoffrey Hinton

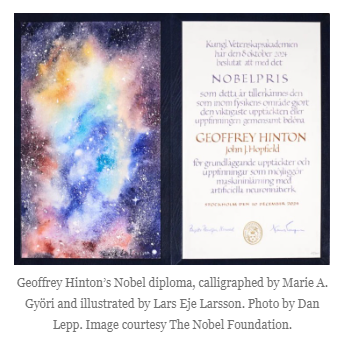

Hinton, dressed in a tuxedo and white bow tie, was co-awarded the prize alongside Princeton University’s John Hopfield “for foundational discoveries and inventions that enable machine learning (ML) with artificial neural networks.”

The British-Canadian citizen is the fourth person from a Canadian institution to win the prestigious science award in the past decade and will see his name go down in history with the likes of Albert Einstein, Niels Bohr, and Werner Heisenberg.

Astrid Söderbergh Widding, board chair of the Nobel Foundation, opened the ceremony with a speech addressing the Nobel Prize winners in each category. She moved from describing the Nobel Peace Prize recipient’s work on nuclear weapons disarmament to the Physics Prize.

“The atomic bomb recalls that basic research put into practice isn’t only for good,” Söderbergh Widding said in front of the laureates, Swedish royalty, and a packed concert hall. “Still, it is through free fundamental research that science must continue to explore and expand the frontiers of human knowledge.”

“The unimaginable consequences that genetic technologies and AI may perhaps introduce can only be managed by truthful, rule-based international collaboration,” she later added.

When Professor Ellen Moons, chair of the Nobel Committee for Physics, introduced Hopfield and Hinton for their award, she struck a similar note of caution about the technology.

“While they can aid humans in delivering fast answers, it is our collective responsibility to ensure that they are used in a safe and ethical way,” Moons said.

When Hinton was called up to accept his Nobel Prize from the King of Sweden, the audience gave him a lengthy ovation.

Hinton learned he had won the award in early October, prompting an outpouring of recognition from Canadian figures and institutions including the Vector Institute, the University of Toronto, Cohere co-founder and CEO Aidan Gomez, Prime Minister Justin Trudeau, and fellow ‘AI godfather’ Yoshua Bengio.

“He has done such an enormous amount for science and has been so inspired by previous winners,” Cohere co-founder Nick Frosst, one of Hinton’s first hires at Google Labs in Toronto, told BetaKit at the time. “It’s great to see his name up there.”

While Hinton has come to be known as one of the godfathers of AI, he has also spent recent years warning of the potentially disastrous effects the systems he helped create could have if left unchecked. Hinton made headlines in 2023 when he resigned from his post as a vice president and engineering fellow at Google to speak more freely about the potential risks of AI. He has also signed multiple open letters warning of the “risk of extinction” posed by AI and its unfettered development.

When BetaKit asked Hinton in October how he reconciles the award with his recent outspokenness on the dangers of AI, he said his advocacy for AI safety was also recognized by the Nobel committee.

“I think we need a serious effort to make sure it’s safe, because if we can keep it safe, it will be wonderful,” he added.

Earlier this week, Hinton delivered a Nobel Lecture for his fellow laureates on Boltzmann Machines, the algorithms that ultimately led to the deep learning systems behind modern AI, ML, and his eventual award. During the lecture, Hinton explained the thought process behind building on fellow Nobel winner Hopfield’s work and joked about the optimism he and his collaborators felt while working on the system.

“When [co-author Terry Sejnowski] came up with this learning procedure for Boltzmann Machines, we were completely convinced that must be how the brain works, and we decided we were going to get the Nobel Prize in Physiology or Medicine,” Hinton said in the lecture hall. “It never occurred to us that if it wasn’t how the brain works, we could get the Nobel Prize in Physics.”

The award ceremony was followed by an elaborate banquet, where Nobel Prize winners were given the opportunity to deliver their acceptance speeches. Hinton used his time to deliver a brief but urgent warning on the future of humanity and AI, and decried technology companies motivated by short-term profits. The following is the full transcript of Hinton’s banquet speech, courtesy of The Nobel Foundation.

This year the Nobel committees in Physics and Chemistry have recognized the dramatic progress being made in a new form of Artificial Intelligence that uses artificial neural networks to learn how to solve difficult computational problems. This new form of AI excels at modeling human intuition rather than human reasoning and it will enable us to create highly intelligent and knowledgeable assistants who will increase productivity in almost all industries. If the benefits of the increased productivity can be shared equally it will be a wonderful advance for all humanity.

Unfortunately, the rapid progress in AI comes with many short-term risks. It has already created divisive echo-chambers by offering people content that makes them indignant. It is already being used by authoritarian governments for massive surveillance and by cyber criminals for phishing attacks. In the near future AI may be used to create terrible new viruses and horrendous lethal weapons that decide by themselves who to kill or maim. All of these short-term risks require urgent and forceful attention from governments and international organizations.

There is also a longer term existential threat that will arise when we create digital beings that are more intelligent than ourselves. We have no idea whether we can stay in control. But we now have evidence that if they are created by companies motivated by short-term profits, our safety will not be the top priority. We urgently need research on how to prevent these new beings from wanting to take control. They are no longer science fiction.

Article Credit: BetaKit